Have you ever tried using ArcCatalog to look at a listing of your feature classes in an SDE Geodatabase to see when they were modified, only to be disappointed that no dates are displayed? I was:

If you’ve been around a while, remember that 1984 Wendy’s commercial with the old lady that kept saying “Where’s the beef?”:

Yeah ESRI, where’s the beef? You do this for a File Geodatabase:

Why not for SDE? Sure, I can use versioning and look for the date that way, but we are a small GIS shop here and only a few people do the editing anyway. What to do?

After talking with our friendly Oracle DBA, searching through some system tables, searching the ArcPy classes, and working on a SQL query, I finally came up with a nice Python script that will pull out the SDE Geodatabase feature classes (they are just tables) and list the creation and modification dates. The info is also saved to a CSV file so you can open it up in Excel.

Here is the script, free for you to use. ** See update 12/4/2017 in comments section **

# ---------------------------------------------------------------------

# get_sde_date.py

# Created on: 3/26/2014

# By: Michael A. Carson, GIS Manager, City of Burbank GIS

# Description: Queries SDE GDB in Oracle to get feature class table dates.

# ---------------------------------------------------------------------

# Import modules

import arcpy,sys,csv

# Python environment

arcpy.env.overwriteOutput = True

# Local variables

sde_conn_file = r"H:\testdir\sde_gdb_connection.sde"

output_file = r"H:\testdir\sde_fc_tables.csv"

sql_statement = "select OWNER,OBJECT_TYPE,OBJECT_NAME,CREATED, \

LAST_DDL_TIME from DBA_OBJECTS where OWNER = 'GDB' \

and OBJECT_TYPE = 'TABLE'"

# Execute SQL statement and save table list

try:

# Make data path relative

arcpy.env.workspace = sys.path[0]

# Use valid SDE connection file to connect to SDE GDB in Oracle

sde_conn = arcpy.ArcSDESQLExecute(sde_conn_file)

print("=============================================\n")

print("Execute SQL Statement: {0}".format(sql_statement))

try:

# Pass the SQL statement to the database

sde_return = sde_conn.execute(sql_statement)

except Exception as err:

print(err)

sde_return = False

# If the return value is a list, display each list as a row

if isinstance(sde_return, list):

for row in sde_return:

print(row)

f = open(output_file, 'wb')

writer = csv.writer(f, delimiter=',')

writer.writerow(['OWNER', 'TYPE', 'NAME', 'CREATED', 'MODIFIED'])

writer.writerows(sde_return)

f.close()

print("Number of rows returned by query: {0} rows".format(len(sde_return)))

print("=============================================\n")

else:

# If the return value was not a list, the statement was most likely a DDL statement

if sde_return == True:

print("SQL statement: {0} ran successfully.".format(sql_statement))

else:

print("SQL statement: {0} FAILED.".format(sql_statement))

print("=============================================\n")

except Exception as err:

print(err)

Keep in mind this is for an Oracle SDE Geodatabase. I am also using ArcGIS 10.1. It will probably NOT work for SQL Server or PostgreSQL. Post a comment if you get it to work on those databases.

So how does this work? Looking at the local variables section, you need to change the value for the sde_conn_file and sql_statement. You can also change the output_file name if you want too.

# Local variables

sde_conn_file = r"H:\testdir\sde_gdb_connection.sde"

output_file = r"H:\testdir\sde_fc_tables.csv"

sql_statement = "select OWNER,OBJECT_TYPE,OBJECT_NAME,CREATED, \

LAST_DDL_TIME from DBA_OBJECTS where OWNER = 'GDB' \

and OBJECT_TYPE = 'TABLE'"

The script uses your SDE connection file to connect to the SDE Geodatabase … AS SDE. This is important. You must connect as the SDE user since the SDE user has privilages to read some of the Oracle system tables that store the info we want to extract. So where can you find your connection file? You probably used ArcCatalog to make your connection to the SDE Geodatabase. Here are some of mine:

They are listed under Database Connections in the ArcCatalog Tree. These connection files are located under your user directory (I’m assuming you are using Windows 7). I found mine located under here:

C:\Users\mike\AppData\Roaming\ESRI\Desktop10.1\ArcCatalog\

The connection files are named the same as you see them in ArcCatalog. I just copied the one that connects as the SDE user and copied it to my workspace for the script. If you don’t want to copy the file, just set the sde_conn_file variable to point to wherever it is. The nice part about using a connection file is that you don’t expose your SDE user password in the Python script.

Now the SQL statement:

sql_statement = "select OWNER,OBJECT_TYPE,OBJECT_NAME,CREATED, \

LAST_DDL_TIME from DBA_OBJECTS where OWNER = 'GDB' \

and OBJECT_TYPE = 'TABLE'"

Note the “\” is just a Python line continuation character that I used to make this fit in this blog (and still run in Python as you see it). Normally I would have the whole thing on one line! Anyway, here is where you want to modify the owner. I have an owner, the database user named GDB, that I use to create and modify some of our feature classes in the SDE Geodatabase. I want to pull out just those tables that are associated with user GDB. If you have more users that edit data, you can change the owner part of the SQL statement this way:

(OWNER = 'USER1' or OWNER = 'USER2' or OWNER = 'USER3')

Everything else should stay the same.

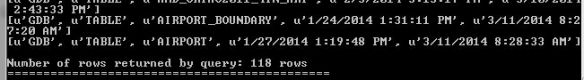

Now run your Python script. It should connect to your SDE Geodatabase using the ArcPy class ArcSDESQLExecute and your connection file, then execute the SQL statement, return a list of records with the owner name, object type, creation date, and date last modified, then write the list to the output CSV file. Here is the output when I ran my script:

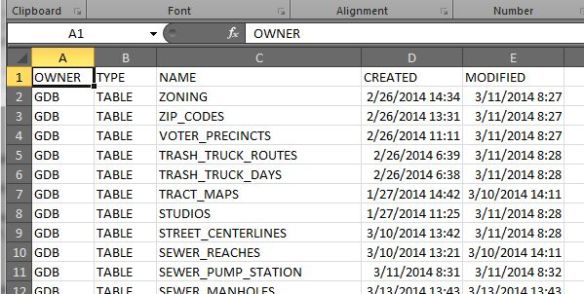

And here is my CSV file:

The one thing I don’t like is the default date/time format Excel sets for the CREATED and MODIFIED column. To change it, I select both columns, right click to select Format Cells, then use a custom date/time setting like so:

You can pick from a list or enter what you want like I did. Much better!

Remember, everything that is listed is a table. Your feature classes are just tables as well. You might notice in your output that there are tables listed that are not feature classes, like indexes (_IDX$) and location tables for geocoding (_LOX). You could filter those out in your SQL statement. For me, I like to see everything.

You could also modify this script and run it as a scheduled task for a monthly report, thus helping you identify a volume of modifications to your data over time. Of course your friendly DBA could probably do that from the database side of things, but then you would not have fun doing this by yourself!

Please, if you modify the script to work with SQL Server or PostgreSQL, tell us how by posting a reply. Did I mention that before?

So there you have it, the ability to get at the modification date for feature classes in an Oracle SDE Geodatabase. Maybe ESRI will change it so we can see it in ArcCatalog in the next version? Time will tell. Enjoy. -mike

I wish I was more of a scripter/programmer! It’s always annoyed me that you can’t just “see” when something was edited. I have a “Date” field for my features, but then you have to open up the feature class and search. You’re right …

I tested this against SDE 10, oracle 10g works great.

I modified the sql statement to return A, D, F, and S table grouped with the business table for clarity.

–this prints the created and last DDL time for versioned and noneversioned data in SDE. it will print the associated A, D, F, S table as well.

–change owner info as necessary for the cursor.

–taoli@cityofpasadena.net

Declare

CURSOR tableinfo_cursor1 IS

SELECT owner,table_name,registration_id

From Sde.Table_Registry

WHERE BITAND(sde.table_registry.object_flags, 8)=8 and owner in (‘POWER’, ‘WATER’)

Order By Registration_Id;

CURSOR tableinfo_cursor2 IS

SELECT owner,table_name,registration_id

From Sde.Table_Registry

Where Bitand(Sde.Table_Registry.Object_Flags, 8)8 and owner in (‘POWER’, ‘WATER’)

Order By Registration_Id;

Begin

Dbms_Output.Put_Line (‘Versioned Data’);

For Table_Info In Tableinfo_Cursor1

Loop

Dbms_Output.Put_Line (Table_Info.Table_Name);

For Rec In (Select Owner, Object_Name, Object_Type, To_Char(Created, ‘YYYY/MM/DD HH24:MI:SS’) Created, To_Char(Last_Ddl_Time, ‘YYYY/MM/DD HH24:MI:SS’) DDLTIME

From Dba_Objects Where Object_Name = Table_Info.Table_Name

Union All –use union all so no sorting

Select Owner, Object_Name, Object_Type, To_Char(Created, ‘YYYY/MM/DD HH24:MI:SS’) Created, To_Char(Last_Ddl_Time, ‘YYYY/MM/DD HH24:MI:SS’) Ddltime

From Dba_Objects Where Object_Name = ‘A’ || Table_Info.registration_id

Union All

Select Owner, Object_Name, Object_Type, To_Char(Created, ‘YYYY/MM/DD HH24:MI:SS’) Created, To_Char(Last_Ddl_Time, ‘YYYY/MM/DD HH24:MI:SS’) Ddltime

From Dba_Objects Where Object_Name = ‘D’ || Table_Info.Registration_Id

Union all

Select Owner, Object_Name, Object_Type, To_Char(Created, ‘YYYY/MM/DD HH24:MI:SS’) Created, To_Char(Last_Ddl_Time, ‘YYYY/MM/DD HH24:MI:SS’) Ddltime

From Dba_Objects Where Object_Name = ‘F’ || Table_Info.registration_id

Union All

Select Owner, Object_Name, Object_Type, To_Char(Created, ‘YYYY/MM/DD HH24:MI:SS’) Created, To_Char(Last_Ddl_Time, ‘YYYY/MM/DD HH24:MI:SS’) Ddltime

From Dba_Objects Where Object_Name = ‘S’ || Table_Info.registration_id)

Loop

Dbms_Output.Put_Line(Rec.Owner || ‘.’ || Rec.Object_Name || ‘, ‘ || Rec.Created || ‘, ‘ || Rec.DDLTIME);

End Loop;

End Loop;

Dbms_Output.Put_Line (‘Non-versioned Data’);

For Table_Info In Tableinfo_Cursor2

Loop

Dbms_Output.Put_Line (Table_Info.Table_Name);

For Rec In (Select Owner, Object_Name, Object_Type, To_Char(Created, ‘YYYY/MM/DD HH24:MI:SS’) Created, To_Char(Last_Ddl_Time, ‘YYYY/MM/DD HH24:MI:SS’) DDLTIME

From Dba_Objects Where Object_Name = Table_Info.Table_Name

Union All –use union all so no sorting

Select Owner, Object_Name, Object_Type, To_Char(Created, ‘YYYY/MM/DD HH24:MI:SS’) Created, To_Char(Last_Ddl_Time, ‘YYYY/MM/DD HH24:MI:SS’) Ddltime

From Dba_Objects Where Object_Name = ‘F’ || Table_Info.registration_id

Union All

Select Owner, Object_Name, Object_Type, To_Char(Created, ‘YYYY/MM/DD HH24:MI:SS’) Created, To_Char(Last_Ddl_Time, ‘YYYY/MM/DD HH24:MI:SS’) Ddltime

From Dba_Objects Where Object_Name = ‘S’ || Table_Info.registration_id)

Loop

Dbms_Output.Put_Line(Rec.Owner || ‘.’ || Rec.Object_Name || ‘, ‘ || Rec.Created || ‘, ‘ || Rec.DDLTIME);

End Loop;

End Loop;

End;

Although this script/SQL does replicate the behavior of the ‘Modified’ column in ArcCatalog with file geodatabases, it doesn’t address the larger issue that both the ‘Modified’ column and LAST_DDL_TIME only look at when the structure of the table was changed, not when the contents of the table changed. Unfortunately for most users, seeing a ‘Modified’ column leads them to think of when the data was last modified and not the table structure itself, and I can’t blame them for thinking this way? When a MS Excel or even a text file has its contents updated, the file modified time stamp gets updated, but that isn’t necessarily the case with Esri’s interpretation of ‘modified.’

Regarding the SQL from a commenter for including A, D, F, and S tables; the SQL as written includes versioned tables in the ‘Non-versioned Data’ output. I am not sure if that is the intended output.

If someone wants to include the associated A, D, F, and S tables and doesn’t care about splitting out versioned and non-versioned tables, there is a pretty straightforward non-cursor approach to getting the information.

SELECT r.table_name

,a.object_name

,To_Char(a.created, ‘YYYY/MM/DD HH24:MI:SS’) CREATED

,To_Char(a.last_ddl_time, ‘YYYY/MM/DD HH24:MI:SS’) LAST_DDL

FROM all_objects a, sde.table_registry r

WHERE a.owner = ‘???’

AND r.table_name NOT LIKE ‘GDB_%’

AND (a.object_name = ‘A’ || r.registration_id

OR a.object_name = ‘D’ || r.registration_id

OR a.object_name = ‘F’ || r.registration_id

OR a.object_name = ‘S’ || r.registration_id

OR r.table_name = a.object_name)

ORDER BY r.table_name, a.object_id

please can anybody tell me how to simply timestamp my geodatabase file

Hi, thanks for the script, Everything runs smoothly until I get an error message :

Execute SQL Statement: select OWNER,OBJECT_TYPE,OBJECT_NAME,CREATED, LAST_DDL_TIME from DBA_OBJECTS where OWNER = ‘RYMA.ANELIUNAS’ and OBJECT_TYPE = ‘TABLE’

‘ascii’ codec can’t encode character u’\uec5c’ in position 52: ordinal not in range(128)

Can anyone tell me how to fix it? I am not a programmer. Cheers

Hi Ryma. Are you using a character with an accent, maybe in the user name? Usually you get a unicode error if you have a character over a 127 value, but I don’t see it in your statement. First, try changing your code in the line that sets the sql_statement variable to one long line like this:

sql_statement = “select OWNER,OBJECT_TYPE,OBJECT_NAME,CREATED,LAST_DDL_TIME from DBA_OBJECTS where OWNER = ‘RYMA.ANELIUNAS’ and OBJECT_TYPE = ‘TABLE'”

See if that works. If not, add .encode at the end like this:

sql_statement = “select OWNER,OBJECT_TYPE,OBJECT_NAME,CREATED,LAST_DDL_TIME from DBA_OBJECTS where OWNER = ”RYMA.ANELIUNAS’ and OBJECT_TYPE = ‘TABLE'”.encode(“utf-8”)

and see if that works. Let me know. -mike

Hi, Thanks for the code.

I am getting the same error. Even with the above correction. Is anyone else still getting the same error.

UPDATE 12/4/2017: After upgrading to 10.5 or 10.5.1, my python script fails to bring over the creation and last modified dates. They are blank. This is probably due to a bug: https://community.esri.com/thread/197575-arcsdesqlexecute-from-arcpy-of-desktop-105-cannot-read-date-column . The workaround is change the line in the python script that sets the sql_statement variable. Try this instead:

sql_statement = “select OWNER,OBJECT_TYPE,OBJECT_NAME,to_char((CREATED), ‘MM/DD/YYYY HH24:MI:SS’),to_char((LAST_DDL_TIME), ‘MM/DD/YYYY HH24:MI:SS’) from DBA_OBJECTS where OWNER = ‘GDB’ and OBJECT_TYPE = ‘TABLE'”

All it does is change the date info from the CREATED and LAST_DDL_TIME fields into text. Note your OWNER setting will be different. That should fix it! -mike

I get the following error.. ArcSDESQLExecute: StreamPrepareSQL ArcSDE Error -37 DBMS table not found Any ideas? Running 10.6.1

Hi Terry. What database are you using? PostgreSQL? SQL Server? Oracle?

Sorry, Oracle..

I know this thread is kind of old, but I’m curious if anyone has replicated it on a Microsoft SQL Server instead of Oracle and if they’d be willing to share their workflow?